12 Sensation

Throughout this book, we have been emphasizing the everyday relevance of psychological principles. The modules on human sensation and perception will have a bit of a different feel to them. You might sometimes have difficulty keeping the relevance of these topics in mind, but for the exact opposite reason you might expect. It is not that sensation and perception are far removed from everyday life, but that they are such a basic, fundamental part of it. Seeing, hearing, smelling, tasting, or feeling the world are key parts of every single everyday experience that you have. Because they are so basic, though, we rarely give them a conscious thought. Thus, perceiving the outside world seems effortless and mindless. Effortless? Yes. Mindless? Not even close. Just beneath the surface, sensation and perception are an extremely complex set of processes.

That is what we find so interesting about sensation and perception. They involve processes so basic for our daily lives that it hardly seems worth calling them processes; you open your eyes and the world appears in front of you with no effort on your part. In reality, however, these processes require the work and coordination of many different brain areas and sensory organs.

Module 11 gave you a hint of the complexity of the brain. In Modules 12 and 13, you will see how some of the brain areas work together in complex processes like sensation and perception, which may be our most important brain functions. The thalamus, primary sensory cortex, primary auditory cortex, and primary visual cortex are all major parts of the brain. The visual cortex is so important that it occupies the entire occipital lobe. Indeed, a great many brain areas contribute directly to sensation and perception.

Module 12 covers sensation, the first part of the sensation and perception duo; it is divided into three sections. Section 12.1, Visual Sensation, begins to reveal the complexity of the visual system by showing you how even the “easy” parts of the process are literally more than meets the eye. Section 12.2, The Other Senses, describes the analogous sensory processes for the other senses. Section 12.3, Sensory Thresholds, describes the beginning stages of what the brain does with the signals from the world.

12.1 Visual Sensation

12.2 The Other Senses

12.3 Sensory Thresholds

READING WITH A PURPOSE

Remember and Understand

By reading and studying Module 12, you should be able to remember and describe:

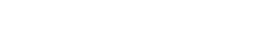

- Parts of the eye and functions: cornea, sclera, iris, pupil, lens, retina (12.1)

- How the retina turns light into neural signals: rods, cones, transduction, bipolar cells, ganglion cells, fovea, optic nerve (12.1)

- Seeing colors, brightness, and features: Young-Helmholtz trichromatic theory, opponent process theory, lateral inhibition (12.1)

- The auditory system: outer, middle, and inner ear, pinna, tympanic membrane, hammer, anvil, and stirrup, oval window, cochlea, hair cells, basilar membrane (12.2)

- How we sense pitch: frequency theory, place theory (12.2)

- Taste, olfaction, and touch: umami, taste buds, olfactory bulb (12.2)

- Pain sensation (12.2)

- Balance: proprioception, vestibular system, otolith organs, semicircular canals (12.2)

- Absolute thresholds, difference thresholds, and signal detection theory (12.3)

Apply

By reading and thinking about how the concepts in Module 12 apply to real life, you should be able to:

- Come up with applications of difference thresholds and Weber’s Law (12.3)

- Come up with applications of signal detection theory (12.3)

Analyze, Evaluate, or Create

By reading and thinking about Module 12, participating in classroom activities, and completing out-of-class assignments, you should be able to:

- Note the parallels among the different sensory modalities (12.1 and 12.2)

12.1 Visual Sensation

Activate

- Based on your current knowledge of cameras and human vision, make a list of similarities and differences between the two.

- Imagine that you live in the wild and have to be able to find food and avoid predators in order to survive. What would be the most important properties of objects for you to be able to see?

Although our experience is that there is a single mental activity involved in perceiving the outside world, psychologists have traditionally distinguished between sensation and perception. Sensation consists of translating physical energy from the world into neural signals and sending those signals to the brain for further processing. Perception is the set of processes that ultimately allow us to interpret or recognize what those neural signals are. In this section, we will introduce you to visual sensation. The two main processes to describe are how the eye focuses light onto the retina at the back of the eye and how the retina transforms that light into the neural signals that are sent to the brain.

sensation: the processes through which we translate physical energy from the world into neural signals and send the signals to the brain for further processing

perception: the processes through which we interpret or recognize neural signals from sensation

The Eye is a Bit Like a Camera

In the case of vision, the physical energy that our sensory system translates into neural signals is light. What we call “light” is simply a specific type of electromagnetic radiation, energy that spreads out in waves as it travels. Other types of electromagnetic radiation include radio waves, microwaves, x-rays, and gamma rays (the highest energy rays; they are what you would use if you were trying to create the Incredible Hulk). The types of electromagnetic radiation differ in the amount of energy that they have, which can be expressed by the wavelength, or the distance between peaks of the waves. Visible light (for humans) is electromagnetic radiation with wavelengths from 350 to 700 nanometers (a nanometer is one billionth of a meter), which is actually only a very small portion of the total range of electromagnetic radiation.
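The link between wavelength and energy follows a standard physics relationship: the energy of a single photon equals Planck's constant times the speed of light, divided by the wavelength. The short Python sketch below is only an illustration of that relationship. The function name `photon_energy_joules` is made up for the example, and the two wavelengths are simply the ends of the visible range quoted above.

```python
PLANCK_CONSTANT = 6.62607015e-34  # joule-seconds
SPEED_OF_LIGHT = 299_792_458      # meters per second


def photon_energy_joules(wavelength_nm):
    """Energy of one photon, E = h * c / wavelength."""
    wavelength_m = wavelength_nm * 1e-9  # convert nanometers to meters
    return PLANCK_CONSTANT * SPEED_OF_LIGHT / wavelength_m


short_end = photon_energy_joules(350)  # short-wavelength end of visible light
long_end = photon_energy_joules(700)   # long-wavelength end of visible light

# Shorter wavelengths carry more energy per photon.
print(short_end > long_end)  # True
```

Because energy and wavelength are inversely related, halving the wavelength doubles the energy, which is why very short-wavelength radiation such as gamma rays is the most energetic.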

Visual sensation and perception are a very active set of mental processes, but they begin somewhat passively, with the projection of light onto a surface at the back of the eye. As you may know, there is light all around us. As light bounces off of objects in the world, it enters the eye and is focused onto the surface at the back. As you will see, different properties of that light are translated into neural signals that lead to the sensation of the visual properties of the objects, such as color and brightness.

In some ways, the eye is like a camera. Both camera and eye have a hole that lets light in, a lens that focuses the light, and a surface onto which the light is projected. The outer surface of the eyeball is called the cornea; it is like a transparent lens cap with an added function. It protects the eye, like a lens cap, but it also begins bending the light rays so that they can be focused.

When you look at an eye, you can see a white part, a colored part, and a black part. The white part is called the sclera; the colored part, the iris; and the black part, the pupil. The iris and pupil are the important parts for the eye’s function as a light-collecting device. The iris is a muscle that controls the amount of light that enters the eye by expanding or contracting the size of the hole in the center. The pupil is nothing more than a hole that allows light to get inside the eye. In bright light, the pupil remains rather small. In dim light, the pupil opens wide to allow as much of the available light as possible to enter. (A camera controls the amount of light by varying both the size of its hole, called the aperture, and the length of time the aperture stays open.)

Directly behind the pupil is the lens which, like all lenses, bends the light rays. The result of the bending is that the light is focused onto the surface at the back of the eye, called the retina. The lens is able to get the focused light to land precisely on the retina by changing its shape, a process called accommodation. (The camera’s lens focuses the light correctly by moving forward and backward.)

So ends the relatively passive part of the process. From here on, vision involves a great deal of brain work, beginning with the way the retina turns the light focused on it into neural signals and sends them to the brain. You will notice that our camera analogy begins to break down at this point. For example, one key difference between vision and a camera is that vision takes shortcuts. One key shortcut is similar to the process of interpolation used by some digital cameras to increase their resolution, or the “effective megapixels.” Here is how the camera works: pixels are separate, or discrete, areas of light that are projected onto a light sensor in the camera. The more pixels the camera can squeeze into a given space, the better the picture quality. Interpolation (essentially, making a guess about what color should fill in the sections that are missing, that is, the spaces between the pixels) can increase the effective resolution of a camera. For example, if two adjacent pixels are sky-blue, the area in between them is probably sky-blue, as well. This is similar to what the eyes do, or more precisely, what the brain does with the input from the eyes. So our new analogy is that vision is like a cheap digital camera that improves its picture quality through interpolation.
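To make the idea of interpolation concrete, here is a minimal Python sketch of the sky-blue example. It is an illustration only, not how any particular camera (or the brain) actually does it. The function name `interpolate_gaps`, the use of `None` to mark an unmeasured gap, and the specific color values are all assumptions made for the example.

```python
def interpolate_gaps(pixels):
    """Fill each gap (None) with the average of its left and right neighbors."""
    filled = list(pixels)
    for i, value in enumerate(pixels):
        if value is None and 0 < i < len(pixels) - 1:
            left, right = pixels[i - 1], pixels[i + 1]
            if left is not None and right is not None:
                # Guess the missing color from the two measured neighbors.
                filled[i] = tuple((a + b) / 2 for a, b in zip(left, right))
    return filled


# Hypothetical red-green-blue readings; None marks the space between pixels.
SKY_BLUE = (135, 206, 235)
row = [SKY_BLUE, None, SKY_BLUE]

print(interpolate_gaps(row)[1])  # (135.0, 206.0, 235.0), the same sky-blue
```

The guess is only as good as the assumption behind it: if a thin dark wire happened to cross the gap, the interpolated sky-blue would be wrong, which is one reason shortcuts like this can occasionally mislead us.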

Our camera-vision analogy really begins to fail, however, when we consider what happens after the light hits the retina. With a camera, the reproduction of the visual scene on the film is close to the end of the process. With human vision, it is barely the beginning. The process seems simple; we look at some object and we see it. As we have hinted, however, recognizing or interpreting visual information (i.e., visual perception) is extraordinarily complex. Let’s look at some of the important parts of the process.

cornea: the transparent outer surface of the eyeball; it protects the eye and begins focusing light rays

sclera: the white part of the eye

iris: a muscle that controls the amount of light entering the eye by expanding or contracting the size of the pupil

pupil: the hole in the center of the eye that allows light to enter and reach the retina

lens: located right behind the pupil, it focuses light to land on the retina

accommodation: the process through which the lens changes its shape to focus light onto the retina

retina: the surface at the back of the eye; it contains the light receptors, rods and cones

The Role of the Retina

There are three layers of cells in the retina. At the very back are the light receptors, neurons that react to light. The second layer is composed of special neurons called bipolar cells, and the top layer, on the surface of the retina, contains neurons called ganglion cells. As you probably noticed from this brief description, light must pass through the ganglion and bipolar cells, which are transparent, before reaching the light receptors. A few details about how these three layers of cells work will help you understand a great deal about how visual sensation works.

When light hits the light receptors, a chemical reaction begins. This chemical reaction starts the process of neural signaling (action potentials and neurotransmission that you learned about in Module 11). This translation of physical energy (in this case, light) into neural signals is called transduction. Two types of light receptors, rods and cones, named for their approximate shapes, are involved in this process for vision. Cones, located mostly in the center of the retina, are responsible for our vision of fine details, called acuity, and our color vision. The rods, located mostly outside of the center of the retina, are very sensitive in dim light, so they are responsible for much of our night vision. The rods are also very sensitive to motion (but not detail), something you have probably experienced many times when you can see something moving out of the corner of your eye but cannot make out what it is.

The relationship between the receptors, on the one hand, and the ganglion and bipolar cells, on the other, explains some of these differences between rods and cones. The rods and cones send neural signals to the bipolar cells, which send neural signals to the ganglion cells, which send neural signals to the brain. The way that the rods and cones are connected to the bipolar cells is the important property to understand.

Let’s start with the rods. Multiple rods connect to a single bipolar cell, which is connected to a ganglion cell. Thus, when the ganglion cell sends a signal to the brain, it could have come from one of several different rods. The brain will be sent information that one of the rods has been stimulated by light, but not which one. Precision, or vision of details, is low, but sensitivity is high; this sensitivity is what makes rods able to see in dim light.

In contrast, many cones, especially those in the center of the retina in an area called the fovea, are each connected to a single bipolar cell, each of which is connected to a single ganglion cell. Thus, cones have a direct line to the brain, which allows for very precise information to be sent, resulting in good sensitivity to detail. The light must hit the cone exactly, however, in order for the signal to be sent.
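The difference between the two wiring patterns can be sketched in a few lines of Python. This is a deliberately simplified illustration: it treats each receptor as either hit by light or not, skips the bipolar cells, and shows only the loss of location information, not the gain in sensitivity. The function name `ganglion_signals` and the parameter `receptors_per_ganglion` are invented for the example.

```python
def ganglion_signals(receptor_hits, receptors_per_ganglion):
    """Return one True/False signal per ganglion cell.

    A ganglion cell signals if any receptor wired to it was hit by light.
    """
    signals = []
    for start in range(0, len(receptor_hits), receptors_per_ganglion):
        group = receptor_hits[start:start + receptors_per_ganglion]
        signals.append(any(group))
    return signals


# Light lands on only the third of six receptors.
hits = [False, False, True, False, False, False]

rod_like = ganglion_signals(hits, receptors_per_ganglion=3)   # many-to-one
cone_like = ganglion_signals(hits, receptors_per_ganglion=1)  # one-to-one

print(rod_like)   # [True, False]
print(cone_like)  # [False, False, True, False, False, False]
```

With rod-like wiring, the brain learns that light arrived somewhere in the first group of three receptors but not which one. With cone-like wiring, the exact position survives.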

The signals from the rods and cones, once sent to the ganglion cells, are routed to the brain through the axons of the ganglion cells. The axons are bundled together as the optic nerve and leave the eye through a single area on the back of the retina. Because there are no rods and cones in this section of the retina, we have a blind spot.

To experience your blind spot: Cover your right eye and look at the picture of the sun with your left eye. Hold the screen or page about a foot away and adjust the distance slightly until the picture of the moon disappears.

visual acuity: our ability to see fine details

light receptors: neurons at the back of the eye that react to light; there are two kinds: rods and cones

cones: light receptors located mostly in the center of the retina; they are responsible for color vision and visual acuity

rods: light receptors located mostly outside the center of the retina; they are responsible for night vision

fovea: the area in the center of the retina (with many cones); it is the area with the best visual acuity

optic nerve: the bundle of ganglion cell axons that leaves the eye through a single area at the back of the retina and carries the neural signals to the brain

transduction: the translation of physical energy into neural signals in sensation

Seeing Color, Brightness, and Features

In order to recreate the outside world as sensations in the brain, separate parts of the visual system process different aspects of the input to represent the visual properties of the scene. Three of the most important visual properties are color, brightness, and features, so it is worth spending some time describing how our visual system processes them.

Color Vision

The other main job of the cones is to provide color vision. Visible light differs in intensity and wavelength (from 350 to 700 nanometers). Changes in intensity correspond to changes in the sensation of brightness, and changes in wavelength correspond to our sensation of different colors. When light hits an object, much of it is absorbed by the surface of the object. Specific wavelengths of light are reflected off of the object, though. It is the processing of these wavelengths of visible light by our visual systems that gives rise to the sensation of different colors. Color, then, is not a property of an object, or even a property of the light itself.

There are three types of cones, each especially sensitive to a different wavelength of light. The rate at which each of these three types of cones fires gives rise to the sensation of different colors. This idea is known as the Young-Helmholtz trichromatic theory. According to the trichromatic theory, we have cones that are sensitive to long, medium, and short-wavelength light. We see long-wavelength light as red, medium as green, and short as blue, so the different cones are sometimes referred to as red, green, and blue cones. The cones fire in response to a wide range of light wavelengths, but they are most sensitive to a specific wavelength. It is the relative rates of firing of the three types of cones that give rise to the sensation of different colors. For example, if the long-wavelength cones are firing a great deal, and the medium and short ones are firing little, we will see the color red. You probably noticed that there is no cone for yellow. If the long and medium wavelength cones are firing a great deal, and the short ones firing little, we will see the color yellow. In a similar way, we see all of the different colors by the amount of firing of the three different kinds of cones.
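The red and yellow examples can be written as a small Python sketch. Treat it as a toy model under stated assumptions: the firing rates are made-up numbers, the `cutoff` that decides whether a cone type is firing “a great deal” is arbitrary, and the function name `perceived_color` is invented for the illustration. Only the color combinations described in the text are named.

```python
def perceived_color(long_rate, medium_rate, short_rate, cutoff=0.5):
    """Name a color from the relative firing of the three cone types."""
    rates = {"long": long_rate, "medium": medium_rate, "short": short_rate}
    strongest = max(rates.values())
    if strongest == 0:
        return "no color signal"
    # A cone type counts as firing "a great deal" if it is close to the strongest.
    active = frozenset(
        name for name, rate in rates.items() if rate / strongest >= cutoff
    )
    names = {
        frozenset({"long"}): "red",
        frozenset({"medium"}): "green",
        frozenset({"short"}): "blue",
        frozenset({"long", "medium"}): "yellow",
    }
    return names.get(active, "another mixture")


print(perceived_color(90, 10, 5))  # red
print(perceived_color(80, 85, 5))  # yellow
```

Notice that the function never looks at a single cone type in isolation. It is the pattern across all three that determines the answer, which is how yellow can be seen without a yellow cone.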

The trichromatic theory was a great idea; it dates back to the early 1800s, and there are very few theories from that long ago that are still accepted by the field. It is not a complete theory of color vision, however. It is better to think of it as the first step in color processing. The theory is not able to explain a couple of interesting observations about our color vision. First, people can easily describe many colors as mixtures of other colors. For example, most people can see orange as a yellowish-red (or a reddish-yellow) and purple as a reddish-blue. Some combinations of colors are never reported, however. Specifically, there are no colors that we see as reddish-green or bluish-yellow. There is simply something incompatible about these two pairs of colors. A second observation, consistent with the idea that red-green and yellow-blue are opposites of a kind, is the experience of color afterimages. For example, if you stare at a yellow object for about a minute and then look at a blank white space, you will see a ghostly blue afterimage of the object for a few seconds. Here, try this boring demonstration of an afterimage until we come up with a more interesting one:

These additional observations can be explained by the opponent process theory, first proposed as an alternative to the Young-Helmholtz trichromatic theory by Ewald Hering in the 1800s. We have red-green and blue-yellow opponent process ganglion cells in our retina. One opponent process cell is excited by red and inhibited by green light; there is also the reverse version, excited by green and inhibited by red. The other type is excited by blue and inhibited by yellow, along with the reverse, excited by yellow and inhibited by blue. Color information in later processing areas of the brain, such as the thalamus, is also handled by opponent processing cells. Opponent process cells take over after the cones; thus, they handle later stages of color vision. Although trichromatic and opponent processing theories were originally in competition as explanations of color vision, most psychologists now think of them as complementary. The three types of cones provide the first level of color analysis, and the opponent process cells take over and handle the later processing in the ganglion cells and the brain.
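One way to see why a reddish-green is never reported is to sketch the two opponent channels in Python. The arithmetic here is a common textbook simplification and an assumption on our part, not a claim about the exact computation ganglion cells perform: the red-green channel is taken as long-wavelength firing minus medium-wavelength firing, and “yellow” is taken as the average of the long and medium firing. The function names and firing rates are hypothetical.

```python
def opponent_channels(long_rate, medium_rate, short_rate):
    """Turn three cone firing rates into two signed opponent signals."""
    red_green = long_rate - medium_rate         # above 0 reddish, below 0 greenish
    yellow = (long_rate + medium_rate) / 2      # long and medium firing together
    blue_yellow = short_rate - yellow           # above 0 bluish, below 0 yellowish
    return red_green, blue_yellow


def describe(long_rate, medium_rate, short_rate):
    """Describe a color using one word per opponent channel."""
    rg, by = opponent_channels(long_rate, medium_rate, short_rate)
    hue_rg = "reddish" if rg > 0 else "greenish" if rg < 0 else "neither red nor green"
    hue_by = "bluish" if by > 0 else "yellowish" if by < 0 else "neither blue nor yellow"
    return hue_rg, hue_by


print(describe(90, 40, 10))  # ('reddish', 'yellowish'), which we would call orange
print(describe(60, 20, 80))  # ('reddish', 'bluish'), which we would call purple
```

Because each channel is a single number that is either positive or negative, it can signal red or green but never both at once, and likewise for blue and yellow.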

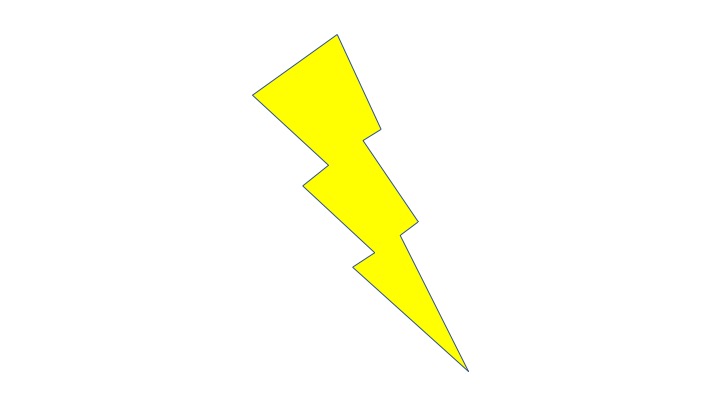

Brightness Vision

Color is such an obvious property of the visual world that you may be tempted to think that it is the most important aspect for visual sensation. It probably is not; it is more likely that brightness is. More precisely, it is probably contrast, or areas where light and dark come together, that is the key property. Why? It is because areas of contrast often mark the separation of objects; an area of contrast is often an edge of an object. Brightness contrast, then, allows us to see an object’s shape, an extremely useful piece of information for its eventual recognition.

Our visual system is constructed to be very sensitive to contrast, and even to enhance it. The enhancement occurs through a process called lateral inhibition. Bipolar cells have inhibitory connections to each other. When one fires because it is stimulated by bright light, it inhibits its neighboring bipolar cells from firing. If those neighboring cells are not also being stimulated by bright light (which is what would happen in a contrast area), the result is a very low rate of firing. The result is an enhancement of the contrast, as the dark area looks darker. The flip side happens in the bright area too; because of reduced lateral inhibition from the neighboring dark areas, bright areas look brighter.
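A row of six cells is enough to show the effect. The Python sketch below is a simplified model under assumptions of our own: each cell's response is the light it receives minus a fixed fraction of the light reaching its two immediate neighbors, and cells at the ends of the row are treated as if they had a neighbor just like themselves. The function name `lateral_inhibition`, the `strength` value, and the light levels are all hypothetical.

```python
def lateral_inhibition(light, strength=0.25):
    """Response of each cell: its own input minus inhibition from two neighbors."""
    responses = []
    for i, own in enumerate(light):
        # Cells at either end borrow their own value for the missing neighbor.
        left = light[i - 1] if i > 0 else own
        right = light[i + 1] if i < len(light) - 1 else own
        responses.append(own - strength * (left + right))
    return responses


# A dark region (20) meeting a bright region (40).
light = [20, 20, 20, 40, 40, 40]

print(lateral_inhibition(light))  # [10.0, 10.0, 5.0, 25.0, 20.0, 20.0]
```

Away from the edge, dark cells respond at 10 and bright cells at 20. Right at the edge, the last dark cell drops to 5 because its bright neighbor inhibits it strongly, and the first bright cell rises to 25 because its dark neighbor inhibits it weakly. The step between the regions has grown from 20 units of light to 20 units of response at the border, compared with 10 elsewhere.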

More generally, you could say that the absolute brightness of some aspect of a visual scene is of little importance. It is the brightness of some area in comparison to a nearby area that is important. Something that looks dark when surrounded by lighter sections may look light when surrounded by darker ones.

Detecting Features

Eventually, visual sensation allows us to end up with a meaningful perception, or recognition of a scene. A key sensory process that allows us to build up to these final perceptions is the detection of specific features. Specialized neurons in our visual cortex fire rapidly when they are stimulated by input corresponding to specific features (Hubel & Wiesel, 1959; 1998). For example, if you are looking at a vertical line, the neural signals that result from that input will cause vertical line feature detectors to fire in your visual cortex. If you are looking at a different feature (for example, a diagonal or horizontal line), the vertical line feature detectors will fire little or not at all. These features are very simple and very numerous. Each is detected by a specific kind of neural feature detector.
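A vertical line detector can be imitated with a tiny grid of weights. The sketch below is a loose Python analogy, not a model of real cortical neurons: the 3-by-3 weight grid `VERTICAL`, the function name `detector_response`, and the three test patterns are all invented for the illustration. Positive weights sit where a vertical line would fall, and negative weights sit on either side.

```python
# Hypothetical weights: excited by the middle column, inhibited by the sides.
VERTICAL = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]


def detector_response(patch, weights=VERTICAL):
    """Weighted sum of a 3x3 patch of light, floored at zero like a firing rate."""
    total = sum(
        p * w
        for patch_row, weight_row in zip(patch, weights)
        for p, w in zip(patch_row, weight_row)
    )
    return max(0, total)  # a neuron cannot fire at a negative rate


vertical_line = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
horizontal_line = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
diagonal_line = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

print(detector_response(vertical_line))    # 6
print(detector_response(horizontal_line))  # 0
print(detector_response(diagonal_line))    # 0
```

The same detector that responds strongly to the vertical line stays silent for the horizontal and diagonal lines, which would need detectors with their own differently arranged weights.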

Some feature detectors in our brains lie in wait for features in specific locations, others for specific features anywhere in our visual field, and still others for features in limited areas of our visual field. As the neural signals corresponding to the simple features travel throughout the visual processing system, they are sent to detectors for more complex features that result from the combination of specific features, such as angles or corners (Hegde & Van Essen, 2000). Then, these more complex features are passed on to other processing sites that have feature detectors for more complex, and specific, features. For example, there are cells in the temporal lobes that fire in response to very specific shapes characteristic of particular scenes, others that respond to familiar objects, and still others that probably respond to human faces (Allison et al., 1999; Bruce et al., 1981; Tanaka, 1996; Vogels et al., 2001).

Debrief

- Which of the three main visual processes outlined in the module (color vision, brightness vision, and feature detection) do you wish your visual system did better? Why?

- If you were offered the opportunity to increase the number of cones in your retina, but the only way to do it was to give up some rods, would you make the trade? Why or why not?

12.2 How the Outside World Gets into the Brain: The Other Senses

Activate

- Which sense do you think is the most important one? Why did you pick the one that you did?

- If you were forced to choose, which sense would you give up? Why did you pick the one that you did?

It hardly seems fair. Vision gets an entire section devoted to it, while the rest of the senses all have to share one section. Why is there such a disparity? One reason is that psychologists know a great deal more about vision than about the other senses. There is simply more to say. At the same time, it would be difficult to argue against the assertion that vision is our most important sense. For a human species that developed as hunter-gatherers, the visual properties of the world would seem the most useful for finding food and avoiding danger. Also, a far greater proportion of brain mass is devoted to vision than to the other senses. Finally, there are clear parallels between vision and the other senses. We will not need to describe some aspects of the other senses in as much detail because you will be able to recognize them from the corresponding processes in vision.

Our main goals in this section, then, are to describe some of the unique facts about the other senses, including the specific sensory organs, receptors, and brain areas involved, and to remind you of the similarities between the other senses and vision.

Hearing

The physical energy that our auditory system turns into sounds is vibrations of air molecules that result when some object in the world vibrates. The vibrating object bumps into the air molecules, which radiate from the source in regular pulses in what we commonly call sound waves. The sound waves have two main properties that our sensory system is equipped to discern. Intensity, or the size of the air movement, is what we end up hearing as loudness, and frequency, or the speed of the pulses, is what we hear as pitch. Right away, you should recognize these two properties as analogous to intensity and wavelength of light for vision.

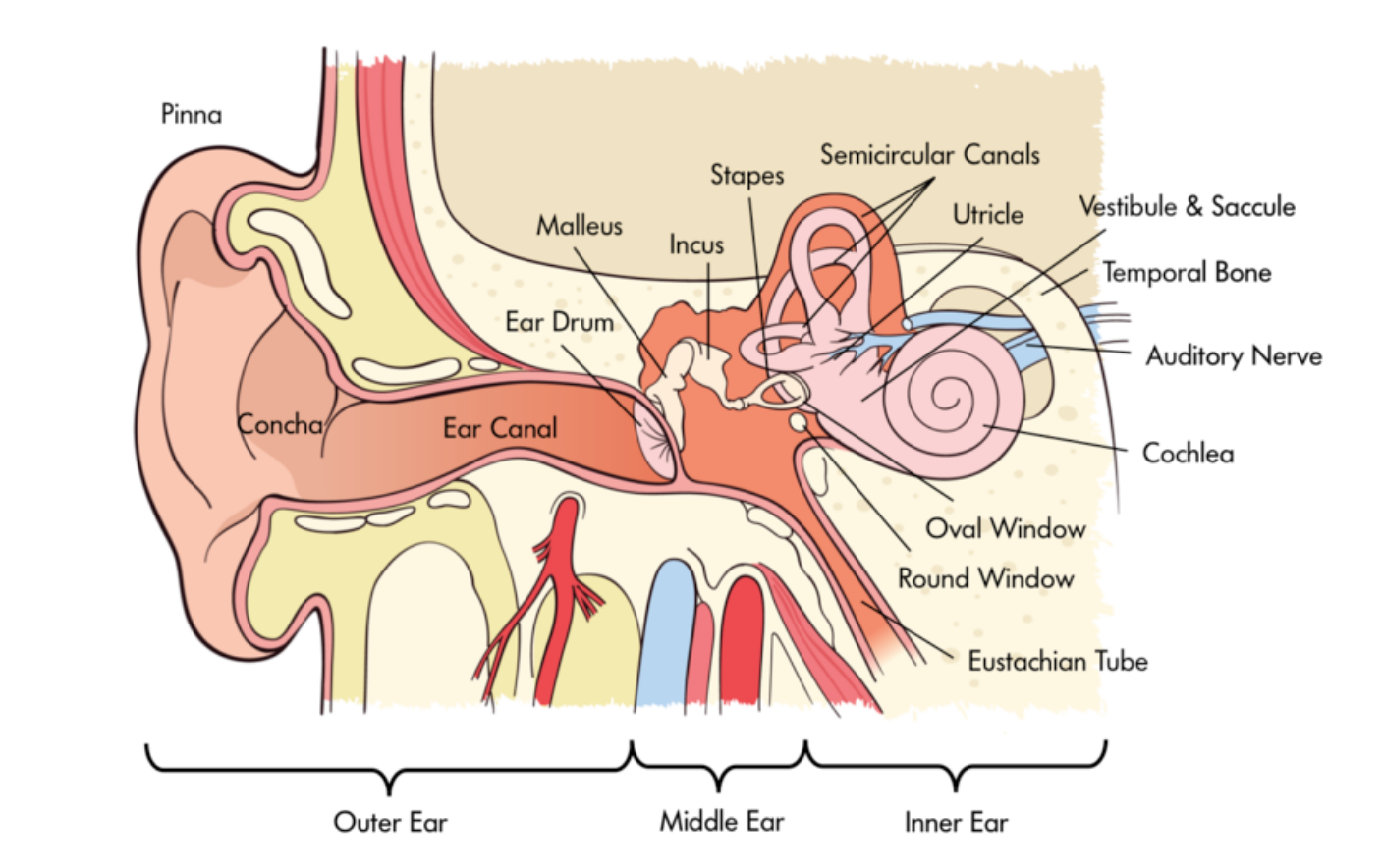

Our ears turn sound waves into bone vibrations, which are then translated into neural signals for further auditory processing. The sensory organs, of course, are the ears. The ear is divided into three main parts, the outer, middle, and inner ear. The outer ear collects the sound waves from the outside world, the middle ear changes them to bone vibrations, and the inner ear generates the neural signals. The outer ear consists primarily of the pinna, the semi-soft, cartilage-filled structure that we commonly refer to as “the ear.” In other words, it is the part of the ear that you can see. It is shaped somewhat like a funnel, and its main functions are to focus surrounding sound waves into the small areas of the middle ear (much like the lens does for vision) and to help us locate the source of sounds.

The middle ear consists of three bones sandwiched between two surfaces called the tympanic membrane and the oval window. This is the area where the sound waves are translated into bone vibrations. Specifically, inside our ear canal, we have a tympanic membrane, what you probably know as the eardrum. This membrane vibrates at the same rate as the air molecules hitting it. On its other side, the tympanic membrane is connected to three bones, called the hammer (malleus), anvil (incus), and stirrup (stapes). Only the stirrup really looks like its name; you have to use your imagination for the other two. The bones, too, vibrate in concert with the air molecules. The stirrup is connected to the oval window, which passes the vibrations on to the inner ear. The main part of the inner ear is a fluid-filled, curled tube called the cochlea. This is where the vibrations get translated to neural signals, so the cochlea is the ear’s version of the retina.

The auditory receptors inside the cochlea are called hair cells; they are located on the basilar membrane running through the cochlea. It is the movement of the hair cells that generates the action potentials that are sent to the rest of the brain. Two separate characteristics of the hair cell vibrations are responsible for our sensation of different pitches. The first one is the frequency of the hair cell vibrations. According to frequency theory, the hair cells vibrate and produce action potentials at the same rate as the sound wave frequency. The principle is complicated slightly by the fact that many sound wave frequencies are higher than the maximum rate at which a neuron can fire. To compensate, the neurons use the volley principle, through which groups of neurons fire together and their action potentials are treated as if they had been generated by a single neuron.
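The volley principle is easier to picture with numbers. The Python sketch below assumes, purely for illustration, that a single neuron can fire at most 1,000 times per second; the text gives no specific limit, so that figure and the function names `volley` and `assign_cycles` are hypothetical. Neurons take turns, each firing on every nth cycle of the sound wave.

```python
import math


def volley(sound_hz, max_rate_hz=1000):
    """Smallest group size that can follow the sound, and each neuron's rate."""
    n_neurons = math.ceil(sound_hz / max_rate_hz)
    rate_per_neuron = sound_hz / n_neurons
    return n_neurons, rate_per_neuron


def assign_cycles(n_cycles, n_neurons):
    """Which neuron (numbered from 0) fires on each successive sound wave cycle."""
    return [cycle % n_neurons for cycle in range(n_cycles)]


print(volley(4000))          # (4, 1000.0)
print(assign_cycles(8, 4))   # [0, 1, 2, 3, 0, 1, 2, 3]
```

In this example, no single neuron fires faster than its limit, yet taken together the group produces one action potential for every cycle of the 4,000-cycle-per-second sound.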

The second characteristic of the hair cell vibrations is their location along the cochlea. According to place theory, high frequency sound waves lead to stronger vibrations in the section of the cochlea nearer to the oval window, while lower frequency waves lead to stronger vibrations in the farther out sections. Together, frequency theory and place theory do a better job explaining pitch perception than either one can alone. The frequency of hair cell vibrations and action potentials may be a more important determinant of pitch for low and medium frequency sounds, and the location of the hair cell vibrations may be a better determinant for medium and high frequency sounds. Because both frequency and location are used for medium frequency sounds, our pitch sensation is better for these than for high and low ones (Wever, 1970).

pinna: the semi-soft, cartilage-filled structure that is part of the outer ear

tympanic membrane: the eardrum; it vibrates at the same rate as air molecules hitting it, which begins the process of translating the energy into neural signals for sounds

hammer, anvil, and stirrup: the three bones that are connected to the tympanic membrane; they transmit vibrations from the tympanic membrane to the inner ear

oval window: the area connected to the hammer, anvil, and stirrup; it passes vibrations on to the inner ear

cochlea: a fluid-filled tube that contains hair cells, the auditory receptors

hair cells: the auditory receptors; they vibrate when stimulation from the oval window reaches them

frequency theory: a theory that states that pitch is sensed from the vibration of hair cells in the cochlea; higher frequency sound waves cause faster vibrations, which are heard as higher pitches

place theory: a theory that states that high frequency sound waves lead to stronger vibrations in the section of the cochlea nearer to the oval window, while lower frequency waves lead to stronger vibrations in the farther out sections

Taste and Smell

You may recall learning at some point that taste and smell are related to each other. Indeed, they are our two chemical senses, which translate chemical differences between substances into the experiences of different odors and tastes. The obvious biological benefit of the sense of taste is to help animals to distinguish between food and poisonous substances, nearly all of which are bitter. Have you ever noticed that food often does not taste as good when you are not hungry, though? A second benefit of taste seems to be to discourage us from eating too much of any one food (Scott, 1990).

The taste receptors on the tongue are actually many different types; each responds to a different taste, such as sweet, sour, salty, and many types of bitter (Adler et al., 2000; Lindemann, 1996). Two recently discovered taste receptors are umami and fat (Chaudhari et al., 2000; Gilbertson, 1998; Nelson et al., 2002). Umami is a Japanese word, as there is no corresponding English word. It is a taste that is sometimes referred to as savory, the taste characteristic that is common to meats and cheese, for example. It comes from the chemical glutamate, which occurs naturally in some foods, and has been put into spice form as monosodium glutamate. The taste receptors are located on taste buds, which are distributed throughout the tongue on tiny bumps called papillae. Each taste bud has a variety of receptors on it, so we have the ability to detect different tastes throughout the tongue, contrary to the common belief that we have taste buds for specific tastes in specific locations. People differ from each other in the number of different receptors, and because we continually replace taste receptors, we may have different compositions of receptors ourselves over time.

Each receptor type responds to a different chemical property, and different taste receptors have different ways of translating the chemical signals into neural signals (Gilbertson et al., 2000). For example, salty taste can result from the movement of sodium ions in taste receptors, and sour taste can result from hydrogen ions. Both mechanisms lead to a depolarization of taste receptors, which begins the neural signal. Taste information in the brain is routed through the medulla and thalamus, and finally to the cortex and amygdala for final processing.

Our sense of smell, or olfaction, is closely related to taste. One way you can see this is to notice how taste relies on our ability to smell. As many people have noticed, foods do not taste the same when you have a cold. If you do not allow people to smell, very few can even identify such distinctive tastes as coffee, chocolate, and garlic (Mozel et al., 1969). The biological benefit of odor is clearly similar to that of taste, as well. It acts as another safeguard against eating dangerous substances. For example, few people will ever get spoiled food into their mouths if they can smell that it is rotten first. It may also be that olfaction complements our taste warning system. Taste sensations arise when chemicals dissolve in water (contained in saliva, of course). Odor, on the other hand, is most pronounced in substances that do not dissolve well in water (Greenberg, 1981).

Olfaction receptors are located in the back of the nasal passages, deep behind the nose. There are about 1,000 different types of smell receptors, and about 6 million total receptors (Doty, 2001; Ebrahimi & Chess, 1998). These receptors send neural signals to the olfactory bulb, a brain area directly above the receptors, just below the frontal lobe of the cortex. Similar to what we saw for taste, the olfactory bulb sends neural signals on to the cortex and amygdala.

olfaction: our sense of smell

olfactory bulb: a brain area directly above olfaction receptors responsible for processing smells

Touch

The sense that we think of as touch actually consists of separate sensing abilities, such as pressure, temperature, and pain. Separate receptors located throughout the skin that covers our bodies respond to temperature, pressure, stretching, and some chemicals. The chemicals can come from outside the body, or they can be produced by the body as in an allergic reaction. Neural signals from nearby touch receptors gather together and enter the spinal cord at various locations. These signals travel to the primary sensory cortex in the parietal lobes via a route through the thalamus.

Pain is particularly important because it is a warning sensation. Simply put, pain is an indication that something is wrong, such as illness or injury. Pain leads us to stop using an injured body part and to rest when injured or ill, so that the body can heal. Animals, including humans, can quickly learn responses that allow us to stop or avoid pain. In other words, we can easily learn to avoid things that can harm us.

Pain receptors are a specific class of touch receptors called nociceptors that are located throughout the body. Nociceptors respond to stimuli that can damage the body, such as intense pressure or some chemicals. For example, the pain we feel from inflammation is the result of the hormone (a chemical) histamine stimulating nociceptors after its release at the inflammation site. Some nociceptors are located deep within the skin, and others are wrapped in a myelin-like shell, so the pain receptors respond only to extreme stimuli (Perl, 1984). Two different kinds of nerve fibers hold pain receptors; one produces sharp, immediate pain and the other produces a slower, dull pain (Willis, 1985). Pain signals are processed in the brain by the cortex, thalamus, and probably other brain areas as well (Coghill et al., 1994).

Balance

The final sense that we will consider is a little different from the first five. At first, our sense of balance does not really seem to be about getting the outside world into our heads, but rather about our place in the outside world. It is more similar to the other senses than it appears at first, however. Similar to the other senses, our sense of balance comes from our nervous system’s ability to translate aspects of the outside world into neural signals. One key difference is that balance does not typically undergo further processing that leads to a conscious perception in the way that looking at a chair or tasting an ice cream cone does.

To get you thinking about how our sense of balance works, try this. Stand up and balance on one foot; you will probably find it very easy. Now try it with your eyes closed. If you have never tried this before, we suggest you do it far away from sharp corners because it is much harder than with your eyes open. Although you can definitely learn to balance without visual feedback, our sense of balance ordinarily comes from the integration of information from vision, proprioception, and the vestibular system.

Balance again on one foot with your eyes closed, this time with your shoes off if possible. This time, pay close attention to what your balancing foot and lower leg are doing. Even if your body is immobile, the muscles are hard at work while you are balancing. Our proprioception system is a key component of this ability. Proprioceptors are receptors throughout the body that keep track of the body’s position and movement. Neural signals are sent from the proprioceptors to the spinal cord, which sends back messages that adjust the muscles. So, ordinarily, the tiny muscle adjustments that you make, such as when you step off of a curb onto the lower street, are outside of conscious awareness. Of course, you can be aware of these adjustments if you attend to them, so there is also neural communication between proprioceptors and the brain.

The vestibular system is a bit like a specialized proprioceptor that applies to the position of the head. Five interrelated parts located in the inner ear (two otolith organs and three semicircular canals) sense tilting and acceleration of the head in different directions. The vestibular system sends neural signals to the brainstem, cerebellum, and cortex (Correia & Guedry, 1978).

proprioception: a system with receptors throughout the body that keep track of the body’s position and movement

otolith organs and semicircular canals: structures in the inner ear that sense tilting and acceleration of the head in different directions

Debrief

- Answer the questions from the Activate for this section again (Which sense is the most important, and which sense would you give up?). Were any of your answers or reasons different after reading the section?

- Draw as many of the parallels between different sensory modalities as you can. This will help you organize them, so you can keep track of them and remember them.

12.3. Sensory Thresholds

Activate

- Are you good at detecting faint stimuli (e.g., a dim light in the dark or a quiet sound in a silent room)?

- Are you good at detecting differences between similar stimuli, such as the weights of two objects, or the loudness of two sounds?

- Which sensory mode is your most sensitive?

In section 12.1, we cautioned against carrying the camera-eye analogy too far. By now it should be clear that even the straightforward parts of our sensory systems do not simply create a copy of the outside world in the brain. From lateral inhibition in vision to the differences in taste receptors across people, there is ample evidence that the information that comes from the sensory system is not a recording. Now it is time to look ahead and consider how we begin to use our sensory information. It will become clearer and clearer from this section and Module 13 on perception that sensation and perception are extremely active, fluid, and constructive processes.

Although the goal of our sensory systems is to get a neural representation of the outside world into the head, that does not mean that we need a perfect copy of it in there. Creating a perfect copy of the world in the brain would require much more mental work than we can spare. Really, what we need our sensory systems to do is to give us enough information about the surrounding world to survive in it. Sometimes, as in the case of blocking out pain when you are concentrating, survival might depend on your ability to not sense something (see Module 13). For example, if a hungry lion were chasing you, it would be helpful not to notice how much your hamstring hurts. Other times, an efficient sensory system requires making guesses about what is out there from very little evidence.

Absolute Thresholds

The task of detecting whether or not a stimulus, any stimulus, is present is one of the most fundamental jobs of our sensory system. After all, in order to do any further sensory and perceptual processing, you have to know that something is there. Even in the case of detection, however, you will see that it is not simply a matter of turning on the recorder.

It is possible to measure the absolute sensitivity of our sensory systems, but as you will see, our actual sensitivity in any given situation can vary considerably from that. This absolute sensitivity is called the absolute threshold, the minimum amount of energy that can be detected in ideal conditions, for example, in vision or hearing in a completely dark or quiet room with no distractions. The different sensory modes, then, have their own absolute thresholds and they are very impressive. Human beings can see a candle flame from 30 miles away on a dark night, hear a watch ticking from 20 feet in a quiet room, smell one drop of perfume in a three-room apartment, taste one teaspoon of sugar in two gallons of water, and feel the touch of a bee’s wing falling on the face from a height of one centimeter (Galanter, 1962).

Of course, there are differences across people. Your absolute threshold for vision might be better than your 81-year-old grandfather’s, for example. Perhaps more importantly, or at least more interestingly, there are differences within people. Basically, your own absolute threshold can be very different at different times. It is a very simple idea. Your absolute threshold can change dramatically, depending on factors such as motivation and fatigue. For example, if you are being paid $5 to sit in a dark room for five hours during a psychology experiment and press a button every time you see a dim light, you will probably miss a few. Especially as the session wears on, your motivation may be low, and fatigue will be high, leading to a relatively high absolute threshold (in other words, a relatively bright light will be required for you to detect it). On the other hand, if you are a guard watching for an approaching enemy and are supposed to report every time you see a dim light on a radar screen, you are likely to see every possible light.

The relationships between threshold and personal factors have been expressed mathematically by signal detection theory (Tanner & Swets, 1954; Macmillan & Creelman, 1991). According to signal detection theory, there are two ways to influence your absolute threshold. First, you can increase your sensitivity, something that is possible only through some kind of enhancement (such as eyeglasses, or night vision goggles). The other way is to change your strategy for reporting the detection of a signal. This is the part that varies with factors like motivation and fatigue. If you are very motivated to see a dim light, your strategy may be to say that you see one whenever there is the slightest bit of evidence, so you will be sure to see all of the lights. Because you will be saying “there it is” so many times, however, you will also have a lot of false alarms, reporting a light when none is there. If you later want to reduce your false alarms, perhaps because you have been “crying wolf” too many times, you can change your strategy again, requiring a brighter light before you report that you see it. Of course, now you will increase the number of times that you miss a dim light that is really there. This relationship between hits, misses, and false alarms is the important lesson to be gained from signal detection theory. If you cannot increase your sensitivity, there will always be this type of relationship. If you get a lot of hits, you will also get a lot of false alarms; if you get few false alarms, you will get a lot of misses.
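To make the trade-off between hits, misses, and false alarms concrete, here is a minimal numerical sketch. It assumes the equal-variance Gaussian model that is commonly used to introduce signal detection theory: the observer's internal response is noisy, a real signal shifts that response upward by an amount we call sensitivity, and the observer says "there it is" whenever the response exceeds a criterion. The function name detection_rates and the particular numbers are our own illustrative choices, not values from the studies cited above.

```python
from statistics import NormalDist


def detection_rates(sensitivity, criterion):
    """Return (hit_rate, false_alarm_rate) for a hypothetical observer.

    Assumption: internal responses are normally distributed with a
    standard deviation of 1. With no signal the mean is 0; with a
    signal the mean is `sensitivity`. The observer reports a signal
    whenever the internal response is above `criterion`.
    """
    noise_only = NormalDist(mu=0.0, sigma=1.0)
    signal_present = NormalDist(mu=sensitivity, sigma=1.0)
    # Probability of saying "yes" when a signal really is there.
    hit_rate = 1.0 - signal_present.cdf(criterion)
    # Probability of saying "yes" when nothing is there.
    false_alarm_rate = 1.0 - noise_only.cdf(criterion)
    return hit_rate, false_alarm_rate


if __name__ == "__main__":
    sensitivity = 1.0  # held fixed: no eyeglasses or night vision goggles
    # A low criterion is the eager guard; a high one is the bored participant.
    for label, criterion in [("lenient", 0.0), ("strict", 1.5)]:
        hits, false_alarms = detection_rates(sensitivity, criterion)
        misses = 1.0 - hits
        print(f"{label}: hits={hits:.2f} misses={misses:.2f} "
              f"false alarms={false_alarms:.2f}")
```

With sensitivity held constant, moving from the lenient to the strict criterion lowers the false alarm rate but also lowers the hit rate (so misses go up). Only raising the sensitivity value improves hits without adding false alarms.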

If you think about it, there are a number of situations in which an observer is asked to detect a faint stimulus, and thus, several real-life applications of signal detection theory. For example, a friend of ours once spent a week with their hand in a cast because the doctor examining their x-ray detected a hairline fracture that was not there. Because the doctor did not want to miss a broken bone, they adopted a strategy that increased their likelihood of getting a hit, but in this case, they got a false alarm. As another example, imagine a teenager trying to sneak in silently after missing curfew. They freeze on the stairs when they hear the slightest creak of a floorboard, sure that their mother has heard them and is getting out of bed. This is another false alarm resulting from a high motivation to detect a stimulus.

absolute threshold: the minimum amount of stimulus energy that can be detected in ideal conditions

signal detection theory: a mathematical model that describes the relationship between sensory thresholds and personal factors, such as motivation and fatigue

Difference Thresholds

A second fundamental use of sensory information is detecting differences. One of our favorite key terms is the alternative name for difference threshold because it may be the most self-explanatory term in all of psychology; the term is just noticeable difference (JND). It is, of course, the smallest difference between two stimuli that can be detected. Although the principles from signal detection theory can be applied to detecting differences, there is a second important way that these thresholds vary.

The notable fact about the just noticeable difference is that it is not a constant. For example, suppose you are holding a pebble in one hand; you may be able to detect the difference in weight if we add another pebble. In other words, the second pebble is more than a JND. What if you were holding a bowling ball in your hand, though? If we added a pebble now, you would not notice the difference; now it is less than a JND.

Over 175 years ago, researchers discovered that the JND was related to the size of the comparison stimulus. Specifically, the JND is approximately a constant proportion of the comparison stimulus. If you are looking at a dim light, you can detect a small difference. On the other hand, if you are looking at a bright light, you need a larger difference before you can detect it. This relationship is known as Weber’s Law, and it holds for judgments of brightness, loudness, lifted weights, distance, concentration of salt dissolved in water, as well as many other sensory judgments (Teghtsoonian, 1971).
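Weber’s Law is usually written as JND = k × I, where I is the size of the comparison stimulus and k is a constant (often called the Weber fraction) that differs from one kind of judgment to another. The short sketch below applies that formula to the pebble and bowling ball example. The Weber fraction of 0.05 and the weights in grams are made-up values chosen only for illustration, and the function names are our own.

```python
def just_noticeable_difference(comparison, weber_fraction):
    """Weber's Law: the JND is a constant proportion of the comparison stimulus."""
    return weber_fraction * comparison


def is_noticeable(comparison, change, weber_fraction):
    """Return True if `change` is at least one JND for this comparison stimulus."""
    return abs(change) >= just_noticeable_difference(comparison, weber_fraction)


if __name__ == "__main__":
    WEBER_FRACTION = 0.05   # hypothetical value, for illustration only
    PEBBLE = 10.0           # grams, hypothetical
    BOWLING_BALL = 5000.0   # grams, hypothetical

    # Adding a pebble to a hand that already holds one pebble.
    print(is_noticeable(PEBBLE, PEBBLE, WEBER_FRACTION))
    # Adding the same pebble to a hand that holds a bowling ball.
    print(is_noticeable(BOWLING_BALL, PEBBLE, WEBER_FRACTION))
```

Under these assumed numbers, the same added pebble is well above one JND when the comparison is another pebble and far below one JND when the comparison is the bowling ball.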

Again, you do not have to think hard to realize that applications of JND’s and Weber’s Law reach far beyond judging the loudness of tones in a psychology experiment. When one of the authors used to lift weights with a friend in college, we used to joke that adding five pounds to our current bench press weight was like wearing long sleeves; we would not even notice it (of course, if we had been bench pressing 25 pounds, we probably would have noticed it). Or think about how consumer products companies may take advantage of the JND. For example, if a company is going to decrease the size of a product (a secret price increase, as they will charge the same price; you may know of this as “shrinkflation”), they will be sure to decrease it by less than a JND. This probably happens much more than you think, precisely because the companies have been successful at staying within the JND (and sometimes because they cheat by keeping the package size the same, reducing only the contents).

difference threshold (just noticeable difference, or JND): the smallest difference between two stimuli that can be detected

Weber’s Law: a perceptual law that states that the difference threshold for a stimulus is related (roughly proportional) to the size of the comparison stimulus

Debrief

- Describe some other examples where signal detection theory would apply.

- Describe some other examples of JND’s and Weber’s Law.