Module 9: Cognitive Psychology: The Revolution Goes Mainstream

Remember and Understand

By reading and studying Module 9, you should be able to remember and describe:

- The place of “mental processes” in early psychology

- The banishment of “mental processes” by the behaviorists

- Ivan Pavlov’s work on classical conditioning

- John Watson’s work, especially the Baby Albert demonstration

- Edward Thorndike’s and B.F. Skinner’s extensions of behaviorist psychology

- Shortcomings of behaviorism

- The Cognitive Revolution

- Progress in memory research: Ebbinghaus and Bartlett, early “post-revolution” research, working memory versus short-term memory, inferences and memory, explicit and implicit memory

- Cognitive psychology in perspective

Modules 5 through 8 showed you how being knowledgeable about some psychological principles can help you think, remember, reason, solve problems, learn, and take tests more effectively, in school and throughout life. Most of the topics contained in these modules are considered the domain of Cognitive Psychology, the psychology of cognition. The study of cognition is the study of knowledge: what it is, and how people understand, communicate, and use it. Cognition is essentially “everyday thinking.”

In the early days of psychology (the late 1800s), cognitive (mental) processes were at the forefront of psychological thinking. But by the first half of the 1900s, psychology, especially in the United States, was dominated by Behaviorism, a view that rejected the idea that internal mental processes are the appropriate subject matter of scientific psychology. Behaviorism itself fell victim to what became known as the Cognitive Revolution in the second half of the 1900s. Psychology, in a way, had come full circle, as mental processes again became a major area of interest. Today, insights about Cognition have touched all areas of Psychology. In addition, Cognitive Psychologists have collaborated with scientists in other disciplines interested in “intelligent behavior” (for example, computer science and philosophy) to create what Howard Gardner (1985) called “The Mind’s New Science,” or Cognitive Science.

Psychology as the Study of Mind

Wilhelm Wundt created the first psychological laboratory in 1879 in Germany, although for a number of years before then several scientists had been conducting research that would become part of psychology. Wundt worked very hard for decades to establish psychology as a viable discipline across Europe and the United States. His laboratory trained many of the first generation of psychologists, who began their own research throughout the western world.

Wundt believed that experimental methods could be applied to immediate experience only. Hence, only part of what we now think of as psychology could be a true science. Recall that a science requires objective observation. Wundt believed that mental processes beyond simple sensations—for example, memory and thoughts—were too variable to be observed objectively.

Mind Versus Behavior

The American psychologist William James argued that psychology should include far more than immediate sensations. He greatly expanded psychology into naturally occurring thoughts and feelings. The group of psychologists called the behaviorists, however, moved psychology in the opposite direction by reducing psychology to the study of behavior only. Behaviorism dominated psychology in the United States through most of the first half of the 20th century. Although, as we shall see, the behaviorists’ approach ended up being far too narrow to explain all of human behavior, their contributions were nevertheless extremely important.

Classical Conditioning

The first giant contributor to behaviorist psychology was Ivan Pavlov. Although Pavlov was not a psychologist—he was a Russian physiologist—his research had an enormous impact on the development of psychology as the science of observable behavior. He discovered and developed the basic ideas about classical conditioning, which he believed explained why animals, including humans, respond to their environment in certain ways.

As a physiologist, Pavlov had built quite a reputation studying digestive processes. He had done a great deal of research with dogs, for which he constructed a tube to collect saliva as it was produced in a dog’s mouth. In the course of his research, Pavlov became annoyed by a common occurrence. If you put food into a dog’s mouth, the dog will begin to salivate. After all, salivation is a natural part of the digestive process. The annoying phenomenon that Pavlov noticed (annoying because it interfered with his regular research) is that the dogs under study would begin to salivate before they were given the food, perhaps when the person who fed them walked into the room (Hunt 2007). In 1902, Pavlov realized that this annoyance was worthy of study itself, and thus embarked upon a series of examinations that would span the rest of his career. This research program has left Pavlov one of the most important contributors in the history of psychology.

Pavlov was responsible for the initial investigations of the central concepts in classical conditioning: unconditioned stimulus, unconditioned response, conditioned stimulus, conditioned response (see Module 6). He typically used food, often meat powder, as the unconditioned stimulus and noted how salivation occurred in response, as an unconditioned response. He would present various neutral stimuli (e.g., sounds, sights, touches) along with the Unconditioned Stimulus (the meat powder), turning them into Conditioned Stimuli. Thus these new stimuli acquired the power to cause salivation (now Conditioned Responses) in the absence of food.

It was Pavlov who discovered that the Conditioned Stimulus must come before the Unconditioned Stimulus, that Conditioned Stimuli could be generalized and discriminated, and that extinction of the conditioned response would occur if a Conditioned Stimulus stopped being paired with the Unconditioned Stimulus.

John Watson, an early leader of the behaviorist movement, seized on Pavlov’s findings. He thought, and persuasively argued, that all animal behavior (including human behavior) could be explained using the Stimulus-Response principles discovered by Pavlov. In support of this idea, Watson and Rosalie Rayner provided a dramatic example of classical conditioning in humans, and one of the most famous psychology studies of all time. They were able to classically condition an infant who became known as Baby Albert to fear a white rat. Before conditioning, the rat was a neutral stimulus (or even an attractive stimulus); it certainly elicited no fear response in Albert. Loud noises did, though. The sound of a metal rod being hit with a hammer close behind Albert’s head automatically made him jump and cry. The loud noise, therefore, was an Unconditioned Stimulus; Albert’s automatic fear, an Unconditioned Response. By showing Albert the rat and then banging on the metal rod (i.e., pairing the neutral stimulus with the Unconditioned Stimulus), Watson and Rayner easily transformed the rat into a Conditioned Stimulus that elicited the Conditioned Response of fear (Watson and Rayner 1920). Two pairings of rat with noise were enough to elicit a mild avoidance response. Only five additional pairings led to quite a strong fear response. As Watson and Rayner described it:

The instant the rat was shown the baby began to cry. Almost instantly, he turned sharply to the left, fell over on left side, raised himself on all fours and began to crawl away so rapidly that he was caught with difficulty before reaching the edge of the table.

Albert’s fear was also generalized to a rabbit, a dog, a fur coat, and a Santa Claus mask.

Although this was an important observation of classical conditioning in humans, the study has since been widely criticized. Watson and Rayner apparently did nothing to decondition Albert’s fear (Hunt 2007). Today this neglect would certainly be judged an extreme violation of ethical standards (even if the study itself was judged ethical).

Operant Conditioning

There were those, including Pavlov and Watson, who believed that all human learning and behavior could be explained using the principles of classical conditioning. Certainly, these specific behaviorist principles shed light on important aspects of human and animal behavior. Edward Thorndike, working primarily with cats and chickens, demonstrated that additional principles were needed to explain behavior, however. By observing these animals learning how to find food in a maze (chickens), or escape from a puzzle box (cats), Thorndike was laying the groundwork for the second major part of behaviorist psychology, operant conditioning, which helped to explain how animals learn that a behavior has consequences (see sec 6.2).

Without a doubt, the most famous psychologist who championed operant conditioning was B. F. Skinner. He demonstrated repeatedly that if a consequence is pleasant (a reward), the behavior that preceded it becomes more likely in the future. Conversely, if a consequence is unpleasant (a punishment), the behavior that preceded it becomes less likely in the future. Working mostly with rats and pigeons, Skinner sought to show that all behavior was produced by rewards and punishments.

The Shortcomings of Behaviorism

Together, the concepts of classical and operant conditioning have played an important role in illuminating a lot of human behavior. Further, a number of applied areas have benefited greatly from behaviorism. For example, if fear can be conditioned (as it was in Baby Albert’s case), it can be counter-conditioned. Systematic desensitization, a successful psychological therapy for curing people of phobias, is a straightforward application of classical conditioning principles (see Module 30).

And yet, something was missing from the behaviorist account. Think about the following question: Why do you go to work (assuming you have a job, of course)? A behaviorist explanation is straightforward: you work because the behavior was reinforced (i.e., you worked and were given a reward, money, for doing so). But, think carefully; do you go to work because you were paid for working last week, or because you expect to be paid for working this week? Suppose your boss hands you your next paycheck and tells you that the company can no longer afford to pay you. Will you return to work tomorrow? Many people would not; they would look for a new job. Although the point seems unremarkable, it is profound. There appears not to be a direct link between a behavior and its consequence, as the behaviorists maintained. Rather, a critical mental event (the expectation of future consequences) intervenes.

Even in classical conditioning, some process like expectation was needed to explain a lot of behavior, even in non-human animals. Recall that classical conditioning is essentially learning that the Conditioned Stimulus predicts that the Unconditioned Stimulus is about to occur. For example, a dog learns that the stimulus of its owner getting the leash (the CS) predicts the stimulus of being taken for a walk (the UCS). Robert Rescorla (1969) demonstrated that it is more than simply co-occurrence of Conditioned Stimulus and Unconditioned Stimulus (i.e., the number of times both occur together) that determines whether classical conditioning will occur. Far more important is the likelihood that the Unconditioned Stimulus appears alone, without the Conditioned Stimulus (this predictive relationship is called the “contingency” between UCS and CS). If the UCS appears alone frequently, then the Conditioned Stimulus is not a good predictor, no matter how often the two appear together. Again, a dog will not develop a strong association between its owner getting the leash and being taken for a walk if it frequently gets to go for a walk even when the owner does not get the leash. The importance of contingency cannot be explained by a strict behaviorist account. Rescorla’s research showed that some Conditioned Stimuli (those with high contingency) were more informative than others, and thus easier to learn.
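The difference between co-occurrence and contingency can be made concrete with a little arithmetic. The sketch below is only an illustration, not Rescorla’s own procedure: it assumes one common way of putting a number on contingency, namely the probability of the UCS when the CS is present minus the probability of the UCS when the CS is absent. The function name and the day counts for the dog-and-leash example are hypothetical.

```python
def contingency(cs_and_ucs, cs_only, ucs_only, neither):
    """Assumed measure: P(UCS | CS) - P(UCS | no CS), computed from trial counts."""
    cs_trials = cs_and_ucs + cs_only
    no_cs_trials = ucs_only + neither
    if cs_trials == 0 or no_cs_trials == 0:
        raise ValueError("need at least one trial with the CS and one without it")
    p_ucs_given_cs = cs_and_ucs / cs_trials
    p_ucs_given_no_cs = ucs_only / no_cs_trials
    return p_ucs_given_cs - p_ucs_given_no_cs


# Hypothetical counts of days. In every case the leash (CS) and a walk (UCS)
# occur together on 20 days, so the number of pairings is identical.
walks_only_with_leash = contingency(cs_and_ucs=20, cs_only=0, ucs_only=0, neither=20)
walks_half_the_time_anyway = contingency(cs_and_ucs=20, cs_only=0, ucs_only=10, neither=10)
walks_every_day_anyway = contingency(cs_and_ucs=20, cs_only=0, ucs_only=20, neither=0)

print(walks_only_with_leash, walks_half_the_time_anyway, walks_every_day_anyway)
```

With the number of pairings held constant, the assumed contingency measure falls as walks without the leash become more common, which is the pattern the paragraph above describes.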

The behaviorists also believed that any association could be learned, which turned out to be not quite true. Consider John Watson’s most famous quotation:

Give me a dozen healthy infants, well-formed, and my own specified world to bring them up in and I’ll guarantee to take any one at random and train him to become any type of specialist I might select—doctor, lawyer, artist, merchant-chief and, yes, even beggar-man and thief, regardless of his talents, penchants, tendencies, abilities, vocations, and race of his ancestors. (1924; quoted in R.I. Watson, 1979)

The behaviorists believed that the relationship between stimulus and response or between behavior and consequence was arbitrary. Any stimulus could become a Conditioned Stimulus for any response, and any behavior could be reinforced equally with the same consequence. But a very important experiment by John Garcia and Robert Koelling in 1966 demonstrated that, at least for classical conditioning, this is not so. Garcia and Koelling showed that animals were biologically predisposed to learn certain UCS-CS associations and not others. For example, if food is spiked with some poison that will make a rat sick, the rat will learn to associate the taste (the CS) with the poison (the UCS). The rat will develop a conditioned response to the taste of the food, and will learn to avoid it. A pigeon, on the other hand, will have great difficulty learning this association between taste and poison. If a visual stimulus, such as a light, is used as a CS, however, the pigeon will have no trouble at all learning to associate it with the poison UCS. This time, the rat will have difficulty learning. The associations that are easy for a species to learn are ones that are biologically adaptive. A bird must learn to associate the visual properties of a stimulus with its edibility because the bird needs to see its potential food, sometimes from a long distance away. A rat, in order to learn whether some potential food is edible, will take a small taste. If the substance does not make the rat sick, it must be food. Again, the fact that some associations are easy and others are difficult to learn cannot be explained by a strict behaviorist account.

Earlier challenges to behaviorism had been supplied by a very important study conducted by Edward Tolman and Charles Honzik in 1930. They demonstrated that learning can occur without reinforcement. One group of rats learned to run through a maze using positive reinforcement for each trial, a food reward at the end of the maze. A second group of rats was not reinforced; of course, they wandered aimlessly through the maze and did not learn to run through it rapidly to reach the goal. A third group was not reinforced for most of the trials. Then, suddenly, reinforcement was given for the last two trials. This third group learned to run through the maze nearly as well as the first group, even though they had many fewer reinforced trials. Clearly, these rats had learned something about the maze while wandering during the early non-reinforced trials.

A final shortcoming of the behaviorist view is that it does not acknowledge that learning sometimes occurs without causing an observable change in behavior. Think about learning in school. What if you study a section of a textbook and remember what it says, but are never given a test question about it? Does that mean you did not learn the content of the textbook? Rather than saying that behaviorist explanations shed light on learning, it is probably more correct to say that they help explain performance (Medin, Ross, & Markman, 2001). Although an explanation of performance may be a useful contribution to psychology, it is far less comprehensive than the original goals of behaviorism.

The Cognitive Revolution

While a first wave of research that revealed the shortcomings of behaviorism was being produced in the 1930s and 1940s, other seeds for a revolution were being planted and beginning to grow. As you probably know, the most significant historical event of the 1940s was World War II. The war had a profound impact on all areas of life; science was no exception. Prominent scientists and mathematicians throughout the United States and Europe were recruited to the war effort, lending their expertise to develop computers, break enemy codes, design aircraft controls, design weapons guidance systems, etc. Researchers would observe certain types of behaviors (for example, reaction times or errors) and make inferences about the mental processes underlying the behaviors. At the same time, doctors and physiologists were learning much about the brain’s functioning from examining and rehabilitating soldiers who had suffered brain injuries in the war. These two fields of inquiry—computer science and neuroscience—led to profound observations about the nature of knowledge, information, and human thought (Gardner 1985).

Many of these observations began to be synthesized in the late 1940s. At a scientific meeting in 1948, John von Neumann, a Princeton mathematician, gave a presentation in which he noted some striking similarities between computers and the human nervous system. Von Neumann and others (e.g., psychologist Karl Lashley, mathematician Norbert Wiener; see Gardner 1985) began to push this “the mind is like a computer” idea. The cognitive revolution had begun.

Now that psychologists had a new way of looking inside the head, many observers believed that a psychology of the mind could be scientific after all. Researchers such as Noam Chomsky, George Miller, Herbert Simon, and Allen Newell began the task of creating cognitive psychology. The view inside the head was not direct, of course, but as more and more talented researchers moved into this new psychological domain, behaviorism’s influence became smaller and smaller.

Strides in the Psychological Study of Cognition

If you look at a book that reviews the cognitive aspects of psychology, you will probably find chapters on Perception (and Sensation), Learning, Memory, Thinking, Language, and Intelligence, in more or less that order. Why is this? Does this organization make sense? It certainly does to psychologists. One way to understand the organization is to view the topics as going from “basic” to “higher” level. Basic cognitive processes—sensation and perception and, in some ways, learning (although it is more often considered a topic in behaviorist psychology)—are required to “get the outside world into the head,” that is, to create internal (mental) representations of the external world. Higher-level processes use these representations of the world to construct more complex “mental events.” For example, you might think of some kinds of memory as a stored grouping of perceptions. Then, you can consider reasoning (a sub-topic within the topic of thinking) as computing with or manipulating sets of facts and episodes (i.e., memories).

The cognitive revolution has propelled a vigorously scientific process of discovery that illuminates learning, memory, thinking, language, intelligence, problem solving, as well as other cognitive processes. The results of that scientific process have led to psychologists’ current beliefs about cognition and form the basis for many of today’s most exciting avenues of psychological inquiry.

Memory – A Cognition “Case Study”

Memory may be the quintessential topic of the cognitive revolution. Nearly absent during the behaviorism days, memory research has been very well represented since then. Around the same time that Wundt was examining the components of sensation, Hermann Ebbinghaus began the systematic study of memory by constructing over 2000 meaningless letter strings and memorizing them under different conditions. Ebbinghaus demonstrated that repetition of material led to better memory (1913; quoted in R.I. Watson 1979). He also examined the effects on memory of such factors as length of list, number of repetitions, and time (Watson, 1979). For example, he discovered that 24 hours after learning a list, only one-third of the items were still remembered. His findings and methods were extremely influential, and they are apparently the basis of several commonly held beliefs about memory—principally that the best way to memorize something is to repeat it.

Importantly, Ebbinghaus believed that it was necessary to remove all meaning from the to-be-remembered material in order to examine pure memory, uncontaminated by prior associations we might have with the material. The problem with his approach, however, is that memory appears to almost never work that way (or if it does, it certainly does not work very well). For example, Ebbinghaus used “nonsense syllables” such as bef, rak, and fim in his research. Well, when we look at these syllables, we automatically think beef, rack, and film. These syllables are not so meaningless after all; they have automatic associations for us. If asked to remember them, we would make use of these associations. That is the way memory typically works.

There was little memory research during the behaviorist era. One striking exception is the work by Frederic Bartlett in the 1930s. Bartlett found that meaning is central to memory. New material to be remembered is incorporated into, or even changed to fit, a person’s existing knowledge. Bartlett read a folk tale from an unfamiliar culture to his research participants and asked them to recall the story. The original tale is very odd to someone from a western culture, and it is difficult to understand. What Bartlett found is that over time, people’s memory for the tale lost many of its original non-western idiosyncrasies and began to resemble more typical western stories. In short, Bartlett’s participants were changing their memory of the tale to fit their particular view of the world. It turns out that Frederic Bartlett was about forty years ahead of his time. It was not until the 1970s that researchers began to think about the fluid and constructive nature of memory in earnest.

Instead, the early memory researchers focused on trying to figure out the different memory systems and describing their properties. The first “Cognitive Revolutionary” memory research took the form of an essay written by George Miller in 1956. His essay, “The Magical Number Seven, Plus or Minus Two,” has become one of the most famous papers in the history of psychology. The essay described Miller’s observation that the number 7 seemed to have a special significance for human cognitive abilities. For instance, the number of pieces of information that a person can hold in memory briefly (in short-term memory, which researchers later came to call working memory) falls in the range of 5 to 9 (7 plus or minus 2) for nearly all people. Miller noted, however, that short-term memory capacity could be increased dramatically by using the process of chunking, grouping information together into larger bundles of meaningful information. For example, if you think of a number series as a set of three-digit numbers (rather than isolated digits), you would probably be able to remember 7 three-digit numbers, or 21 total digits.
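The arithmetic of chunking can be shown in a few lines. The following is a minimal sketch, not a model of memory: the 21-digit series and the helper function are hypothetical, chosen only to show that the same digits become 7 items once they are grouped into threes.

```python
def chunk(digits, size=3):
    """Split a string of digits into consecutive groups of `size` digits."""
    return [digits[i:i + size] for i in range(0, len(digits), size)]


# A hypothetical series of 21 isolated digits, far more than 7 plus or minus 2 items.
series = "314159265358979323846"
chunks = chunk(series)

print(len(series), "isolated digits")
print(len(chunks), "three-digit chunks:", chunks)
```

Nothing about the digits themselves changes; only the number of units to be held drops from 21 to 7, which falls within the range Miller described.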

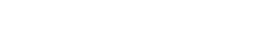

Research in the 1960s was dominated by the information processing approach. As in computer scientists’ flow-charting, in which systems and processes are drawn as boxes and arrows, the information-processing approach depicts the way information flows through the system. The most influential of the information processing descriptions of memory was developed by Richard Atkinson and Richard Shiffrin (1968). Atkinson and Shiffrin described memory as consisting of three storage systems.

Sensory memory holds information in storage for a very brief period (around one second), just long enough for someone to pay attention to it so it can be passed on to the next system. Short-term memory is a limited-capacity (about 7 items, or chunks), short-duration system, a temporary storage system for information that is to be transferred into (encoded into) long-term memory. Long-term memory is essentially permanent, essentially unlimited storage. Information is retrieved from long-term memory back into short-term memory.

Atkinson and Shiffrin’s description of memory is very close to the one used in section 5.1. The difference is that short-term memory is replaced in the module with working memory. The two concepts are not exactly interchangeable. The theory of working memory emphasizes that information is not simply held, but rather is used during short-term storage (Baddeley and Hitch 1974). So, for example, you might simply try to hold information, such as a telephone number, for a short time until you can get to a phone. Or you might have information in mind because you are using it to solve a problem. The current view of working memory also distinguishes between verbal and visual (or visuospatial) memory, which seems to capture an important distinction (Baddeley, 1996; Jonides et al., 1996; Smith, Jonides, & Koeppe, 1996). Whichever model you use (the original short-term memory, or updated working memory), it is clear that this temporary storage system is both limited in capacity and very temporary. Lloyd Peterson and Margaret Peterson (1959) provided a demonstration of just how temporary short-term memory can be. Their participants were given strings of 3 letters (for example, XPF) and prevented from rehearsing (by forcing them to count backwards by 3’s). Participants sometimes forgot the letters in as little as 3 seconds. By 18 seconds, very few people could remember any of the letter strings.

Researchers in the 1970s began to move away from the boxes and arrows of the information processing approach. They began to think again about the way memory functions in life and thus were picking up the long neglected agenda of Frederic Bartlett.

Craik and Tulving’s levels of processing research and Bransford and Johnson’s “Doing the Laundry” research were important, in part, because they focused on memory not as a static, fixed storage system, but as a dynamic, fluid process. According to the levels of processing view, for example, information might last in memory for a lifetime, not because it was fixed in a long-term memory system, but because it was encoded, or processed, very elaborately. This is important, both to psychologists interested in understanding the nature of memory and to people who might be interested in improving their own memories.

Other researchers picked up on the role of inferences in determining understanding and memory. For example, Rebecca Sulin and James Dooling (1974) demonstrated how such inferences can actually become part of the memory for the story itself (similar to the way Bartlett’s subjects back in the 1930s changed their memory of the folk tale to be consistent with their views of the world). Half of the participants in their experiment were asked to remember the following paragraph, entitled “Carol Harris’s Need for Professional Help”:

Carol Harris was a problem child from birth. She was wild, stubborn, and violent. By the time Carol turned eight, she was still unmanageable. Her parents were very concerned about her mental health. There was no good institution for her problem in her state. Her parents finally decided to take some action. They hired a private teacher for Carol.

The other half of the participants read the same paragraph, but the name Carol Harris was replaced with Helen Keller. One week later, participants were given a recognition test. One of the test sentences was “She was deaf, dumb, and blind.” Only 5% of the “Carol Harris” participants mistakenly thought this sentence was in the original paragraph, but 50% of the “Helen Keller” participants made this error. Thus, participants made inferences about the story based on their knowledge of Helen Keller; the inferences later became part of the memory of the original story for many participants.

Researchers have also continued to make strides toward describing and distinguishing between different memory systems, one of the early goals of memory research. One distinction that you already saw is between declarative memory (facts and episodes) and procedural memory (skills and procedures). A related distinction is between explicit memory and implicit memory. Explicit memory is memory for which you have intentional or conscious recall. It pertains to most of declarative memory. Explicit memory is what you are using when you say, “I remember…” Implicit memory refers to memory in which conscious recall is not involved, such as remembering how to ride a bicycle. It includes procedural memory, to be sure, but implicit memory can also be demonstrated using declarative memory. Suppose we ask you to memorize a paragraph and it takes you 30 minutes to do it. One year later, we show you the paragraph again and ask you if you still remember it. Not only do you not remember the paragraph, you do not even remember being asked to memorize it one year earlier (in other words, there is no conscious recall or recognition). But if you were to memorize the paragraph again, it would probably take you less time than it did originally, perhaps 20 minutes. This 10-minute “savings in relearning” (Nelson, Fehling, & Moore-Glascock 1979) indicates that you did, at some level, remember the paragraph. This memory without conscious awareness is implicit memory.
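The “savings in relearning” in this example is simple to compute. The sketch below uses the 30-minute and 20-minute figures from the paragraph above; expressing the savings as a proportion of the original learning time is a conventional way to report it, and the function name is hypothetical.

```python
def savings(original_minutes, relearning_minutes):
    """Return (minutes saved, proportion of the original learning time saved)."""
    if original_minutes <= 0:
        raise ValueError("original learning time must be positive")
    saved = original_minutes - relearning_minutes
    return saved, saved / original_minutes


# Figures from the example: 30 minutes to learn, 20 minutes to relearn a year later.
minutes_saved, proportion_saved = savings(30, 20)

print(minutes_saved, "minutes saved")
print(round(proportion_saved * 100), "percent of the original learning time")
```

A savings of zero would suggest that nothing was retained; any positive savings points to some memory of the material, even when there is no conscious recall of it.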

There has been a debate among researchers about whether explicit and implicit memory are separate kinds of memory. Many experiments and case studies have been conducted that demonstrate differences (e.g., Jacoby and Dallas 1981; Rajaram & Roediger, 1993). For example, researchers have shown that some patients who suffer from brain injury-induced amnesia do not suffer deficits on implicit memory tasks, despite profound deficits on explicit memory tasks using the same information (e.g., Cohen & Squire 1980; Knowlton, Squire, and Gluck 1994). Critics of this research, however, have suggested that the observed differences may reflect a bias in the way research participants are responding or some other phenomenon (Ratcliff and McKoon 1997; Roediger & McDermott 1993).

More recently, cognitive neuroscience, which combines traditional cognitive psychological research methodology with advanced brain imaging techniques, has begun to shed light on the controversy. It appears that different brain areas are involved in implicit and explicit memory. Specifically, a brain structure known as the hippocampus (along with related structures) is central to the processing of explicit memories (i.e., memories in which conscious awareness is present), whereas it is relatively uninvolved in the processing of implicit memories (i.e., changes in behavior that are not accompanied by conscious awareness) (Schacter, 1998; Clark & Squire 1998) (see sec 9.2). Thus, it appears that cognitive neuroscience has produced good evidence that implicit and explicit memory might be different kinds of memory. Such research promises to clarify a number of other aspects of memory in coming years.

Cognitive Psychology in Perspective

Psychology began as a systematic investigation of the mind, then became the science of behavior, and is currently the science of behavior and mental processes. These changing conceptions illustrate changes in the centrality of cognitive processes in the field of psychology. Cognition has been, in turn, the near complete focus of the field, completely banned from the field, and now, integrated into the field.

Cognitive psychology is a set of inquiries and findings that may seem rather abstract. Basic cognitive processes, such as sensation and perception, function to get the external world into the head. Intermediate level processes, such as memory, create mental representations of the basic perceptions. Higher-level cognitive processes, such as reasoning and problem solving, use the outputs of these basic and intermediate processes. But knowledge of cognitive psychology can benefit you in many ways, as Modules 5 through 8 suggest. If you do follow the prescriptions presented in the modules, you will have greater success in school, to be sure. With more solid thinking skills, however, you will also be equipped to succeed as a learner throughout life, which means that you will succeed throughout life, period.

Please realize that both this applied focus and the more scientific focus are important to you. First, of course, in order to succeed in later psychology classes, many of you will need to know about the traditional, academic approach. More importantly, perhaps, careful attention to the details of psychological principles will help you to apply them to your life more effectively. For example, it is a deep understanding of how memory encoding works, even at the neuron level, that allows you to see why the principles for remembering that are suggested in Module 5 are so effective. Understanding why something works is much more compelling than simply realizing that something works. Similarly, when you understand what deductive reasoning is, why it is important, and why it is so difficult to do correctly, it can help motivate you to learn and practice these skills.

It is reasonable to expect that several trends related to cognitive psychology that have begun over the past decades will continue. First, the integration of a cognitive perspective into other psychology sub-fields will likely continue. As you will see throughout this book, insights about cognition have added to our understanding of psychological disorders, social problems such as aggression and prejudice, and of course education. Second, the merging of neuroscience and cognitive psychology—cognitive neuroscience—will continue. As brain imaging techniques become more accessible, more researchers will turn to them to look for brain activity and the structural underpinnings of cognitive processes. Finally, cognitive psychology will continue its strong interdisciplinary orientation and Cognitive Science will flourish. Researchers and thinkers from fields such as psychology, computer science, philosophy, linguistics, neuroscience, and anthropology all had a hand in the development of cognitive science. From the beginning, then, cognitive psychologists have been talking to and working with researchers in several other disciplines. This trend, too, is likely to continue.

But wait, do researchers still care about Classical and Operant Conditioning?

Very careful readers might have noticed some important details in the section above where we began to address the limitations of the behaviorist view. First, although the research revealed limitations of these approaches, Rescorla, and Garcia and Koelling were conducting their research on the topics of classical and operant conditioning (in other words, they were not working against classical and operant conditioning; they were working within these topics). Second, you might have noticed the dates: 1969 for Rescorla and 1966 for Garcia and Koelling, well after the 1956 Cognitive Revolution that we told you about. It is important that you realize that the revolution was not complete. Cognition did not force the older topics out of research. Indeed, researchers are still publishing studies related to both classical and operant conditioning in 2020, over 100 years after the concepts were first discovered. “What else is there to discover?” you may wonder. “Surely, researchers must have figured out everything about these concepts in 100 to 120 years, right?”

It is probably fair to say that we will never learn everything there is to learn about a topic. Instead, researchers can continue to make discoveries by going in at least two directions. First, they can examine smaller and smaller parts, or details, in the processes. For example, Honey, Dwyer, and Iliescu (2020) proposed a classical conditioning model that predicted that the association between the US and the CS is different from the reverse association between CS and US. By paying attention to this asymmetry, they were able to account for findings that had eluded prior models of classical conditioning. The second key direction that can extend the useful life of a topic indefinitely is to develop applications of a topic. For example, Frost et al. (2020) recently described the operant conditioning principles that underlie a set of behavioral interventions that have been successfully used to help children with autism spectrum disorders learn new skills.
